Can humans identify AI-generated (fake) videos and provide grounded reasons? Video generation models have advanced rapidly, yet one critical dimension has been largely overlooked: whether humans can detect deepfake traces within a generated video, i.e., spatiotemporally grounded visual artifacts that reveal a video as machine-generated.

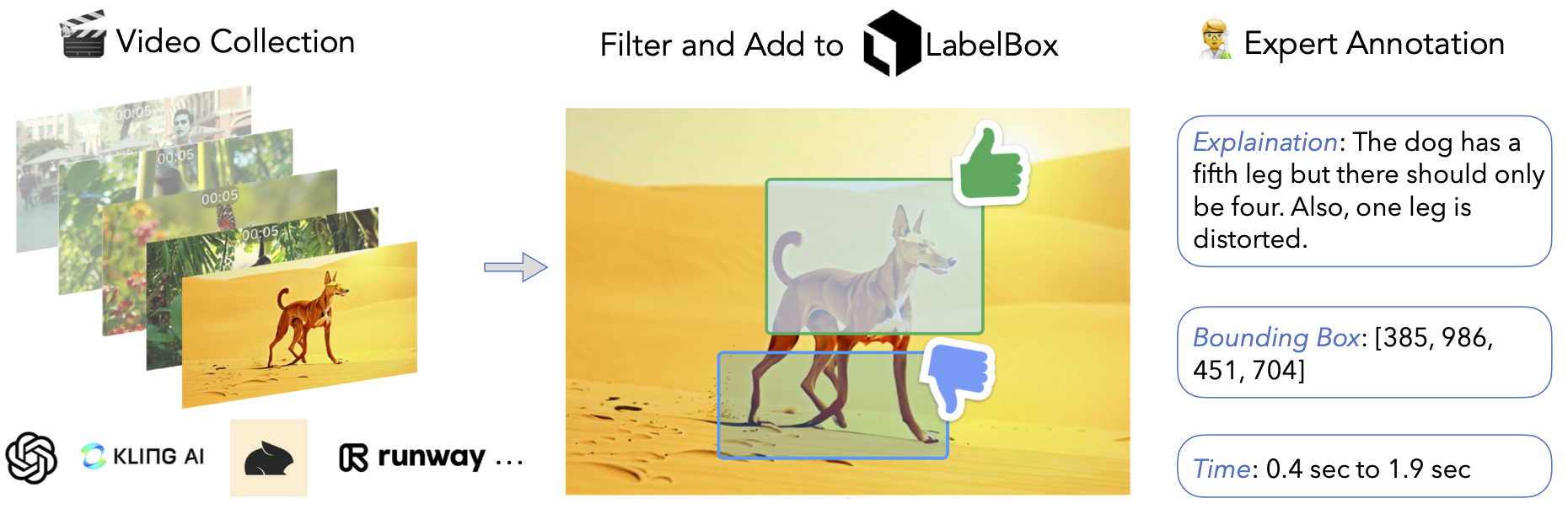

We introduce DEEPTRACEREWARD, the first fine-grained, spatially and temporally aware benchmark that annotates human-perceived fake traces for video generation reward. The dataset comprises 4.3K detailed annotations across 3.3K high-quality generated videos. Each annotation provides a natural-language explanation, pinpoints a bounding-box region containing the perceived trace, and marks precise onset and offset timestamps.
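To make the three components of an annotation concrete, here is a minimal sketch of one annotation record. The class name, field names, the `(x_min, y_min, x_max, y_max)` pixel box convention, and the validation rules are assumptions for illustration, not the dataset's released schema.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class TraceAnnotation:
    """One human-perceived fake trace in a generated video (hypothetical schema)."""
    video_id: str
    explanation: str                            # natural-language explanation of the trace
    bbox: Tuple[float, float, float, float]     # assumed (x_min, y_min, x_max, y_max), pixels
    onset: float                                # trace start timestamp, seconds
    offset: float                               # trace end timestamp, seconds

    def __post_init__(self) -> None:
        # Reject degenerate boxes and empty or inverted time spans.
        x0, y0, x1, y1 = self.bbox
        if not (x0 < x1 and y0 < y1):
            raise ValueError("bbox must satisfy x_min < x_max and y_min < y_max")
        if not (0.0 <= self.onset < self.offset):
            raise ValueError("timestamps must satisfy 0 <= onset < offset")

    @property
    def duration(self) -> float:
        """Length of the annotated trace in seconds."""
        return self.offset - self.onset


# Usage with made-up values:
ann = TraceAnnotation(
    video_id="vid_0001",
    explanation="The hand merges into the cup as it is lifted.",
    bbox=(120.0, 80.0, 260.0, 210.0),
    onset=2.5,
    offset=4.0,
)
print(ann.duration)  # 1.5
```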

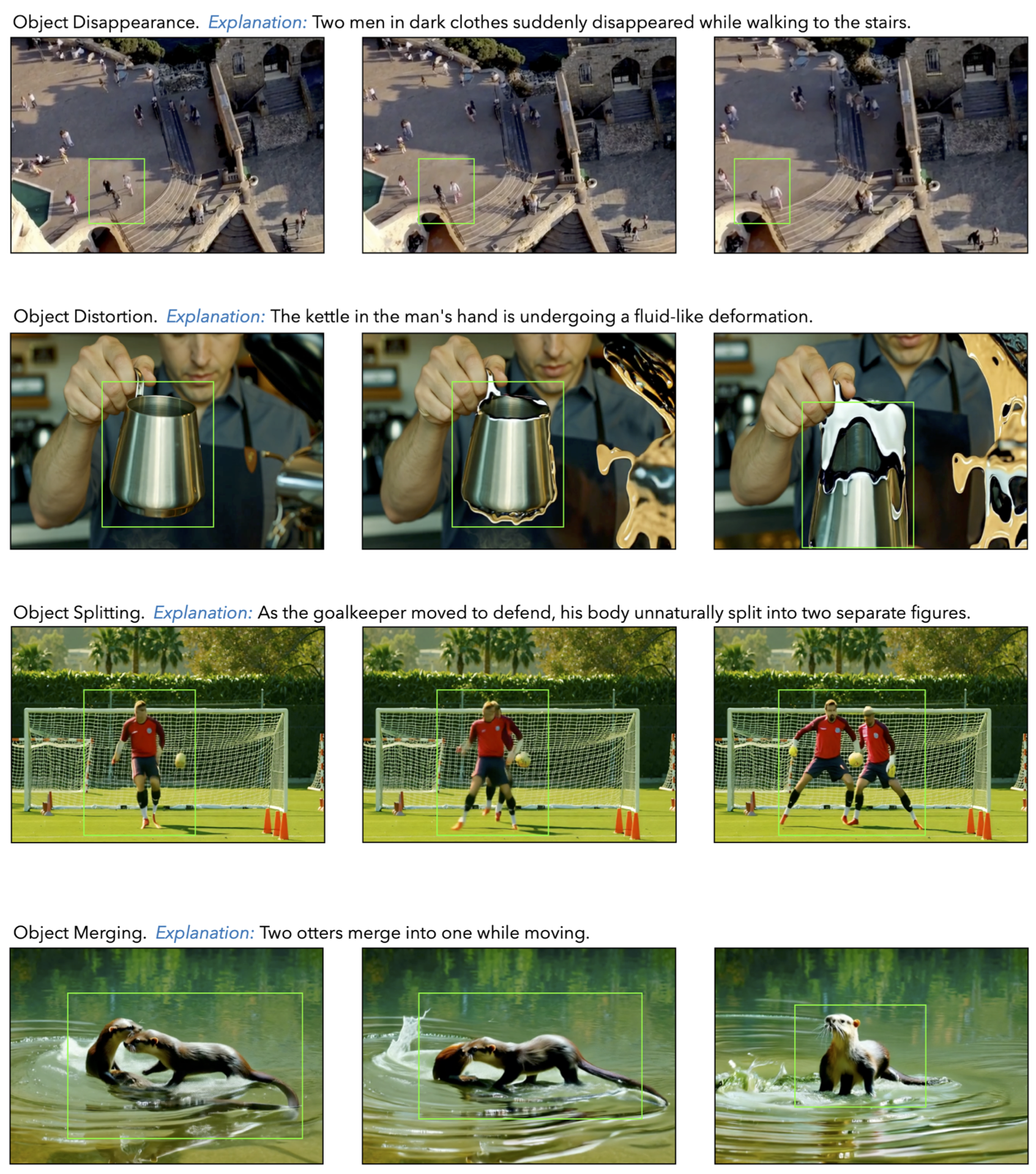

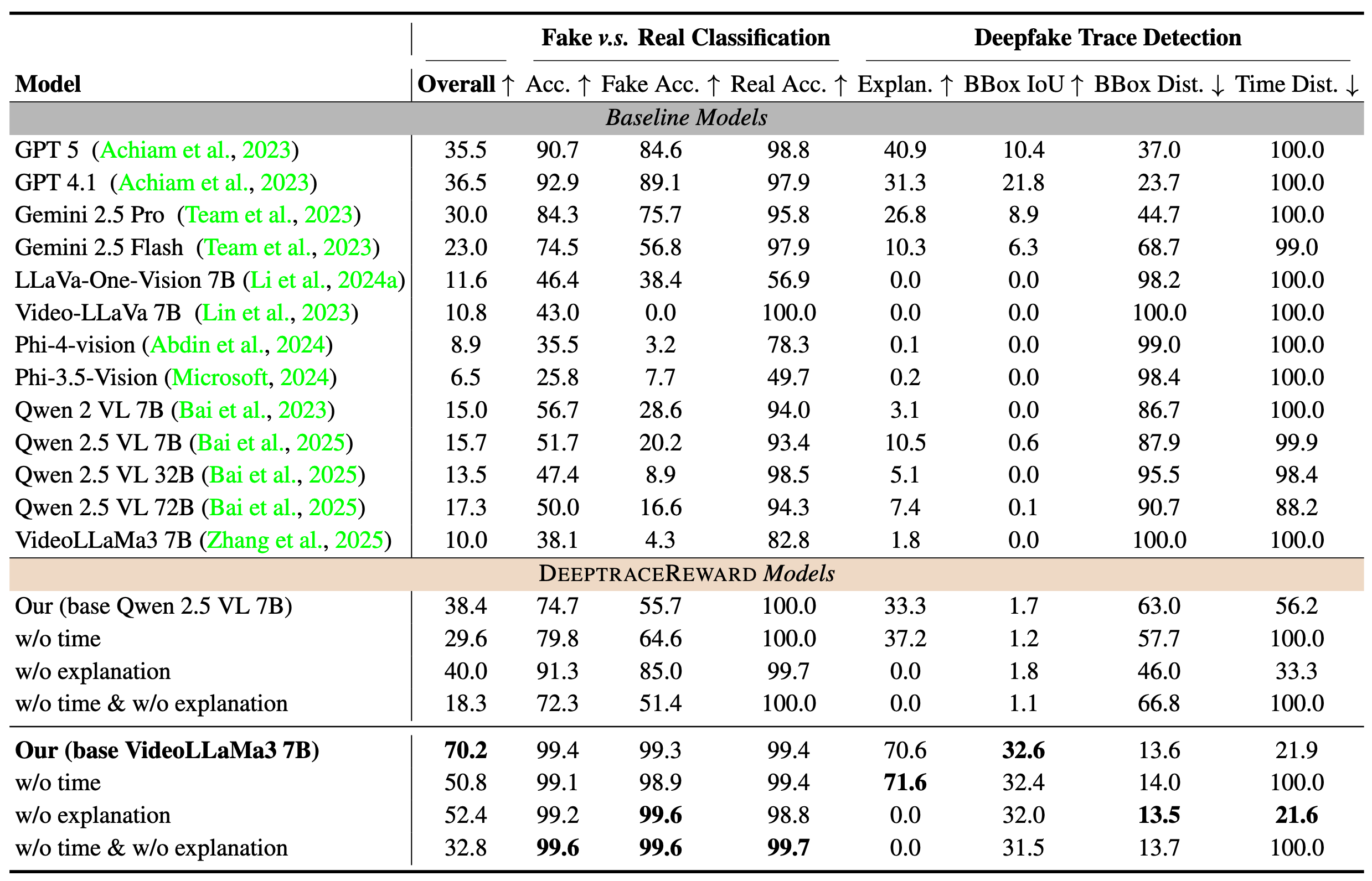

We consolidate these annotations into 9 major categories of deepfake traces that lead humans to identify a video as AI-generated, and train multimodal language models (LMs) as reward models to mimic human judgments and localizations. On DEEPTRACEREWARD, our 7B reward model outperforms GPT-5 by 34.7% on average across fake clue identification, grounding, and explanation.

Interestingly, we observe a consistent difficulty gradient: binary fake vs. real classification is substantially easier than fine-grained deepfake trace detection; within the latter, performance degrades from natural-language explanation (easiest), to spatial grounding, to temporal labeling (hardest). By foregrounding human-perceived deepfake traces, DEEPTRACEREWARD provides a rigorous testbed and training signal for socially aware and trustworthy video generation.
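This passage does not state how spatial grounding and temporal labeling are scored. One common choice, assumed here purely for illustration, is intersection-over-union (IoU): between predicted and annotated bounding boxes for spatial grounding, and between predicted and annotated onset/offset intervals for temporal labeling. The function names below are hypothetical.

```python
def box_iou(a, b):
    """IoU of two boxes given as (x_min, y_min, x_max, y_max)."""
    # Width and height of the overlap rectangle (zero if the boxes do not overlap).
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def interval_iou(a, b):
    """IoU of two time intervals given as (onset, offset) in seconds."""
    inter = max(0.0, min(a[1], b[1]) - max(a[0], b[0]))
    union = (a[1] - a[0]) + (b[1] - b[0]) - inter
    return inter / union if union > 0 else 0.0


# Usage with made-up predictions vs. annotations:
print(box_iou((0, 0, 10, 10), (0, 0, 10, 5)))    # 0.5
print(interval_iou((2.0, 4.0), (3.0, 4.0)))      # 0.5
```

Both functions reduce to the same overlap-over-union ratio, one in 2D pixel space and one on the 1D time axis, so spatial and temporal localization can be reported on a common [0, 1] scale.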

Our video collection process follows two key criteria: include only high-quality generated videos, and include only videos that contain motion. The first criterion is motivated by annotation challenges observed in low-quality videos generated by many open-source models, which tend to be ambiguous, extremely short (e.g., only 1 second), or entirely distorted across all frames, making them unsuitable for fine-grained deepfake trace identification.

The second criterion stems from our initial observation that most videos retained after manual filtering depict dynamic scenes involving object or human movement. This bias is deliberate: artifact patterns such as unnatural trajectories, object distortions, and sudden blurring are far more likely to emerge in motion-rich scenarios than in static ones, which rarely exhibit consistent visual anomalies. These insights guide our video selection and annotation strategy, which prioritizes movement-centric contexts where fake clues are more prevalent.
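The filtering described above was manual. As a sketch only, the two measurable parts of the criteria (the clip is not extremely short, and the clip contains motion) could be encoded as an automated pre-screen run before human review. Mean absolute frame differencing is a swapped-in motion heuristic, not the authors' method; the thresholds `min_seconds` and `min_motion` are hypothetical; and the sketch does not assess visual quality or distortion at all. Frames are assumed to be grayscale 2D lists.

```python
def mean_abs_frame_diff(frames):
    """Average per-pixel absolute difference between consecutive grayscale frames."""
    if len(frames) < 2:
        return 0.0
    total, count = 0.0, 0
    for prev, curr in zip(frames, frames[1:]):
        for row_p, row_c in zip(prev, curr):
            for p, c in zip(row_p, row_c):
                total += abs(c - p)
                count += 1
    return total / count if count else 0.0


def passes_prescreen(frames, fps, min_seconds=2.0, min_motion=1.0):
    """Hypothetical pre-screen: drop very short clips and near-static clips."""
    duration = len(frames) / fps
    return duration >= min_seconds and mean_abs_frame_diff(frames) >= min_motion


# Usage with tiny synthetic 2x2 "videos" at 24 fps:
static = [[[0, 0], [0, 0]] for _ in range(48)]                        # 2 s, no motion
moving = [[[10 * (t % 2)] * 2 for _ in range(2)] for t in range(48)]  # 2 s, flickers 0/10
print(passes_prescreen(static, fps=24))       # False
print(passes_prescreen(moving, fps=24))       # True
print(passes_prescreen(moving[:24], fps=24))  # False (only 1 s long)
```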

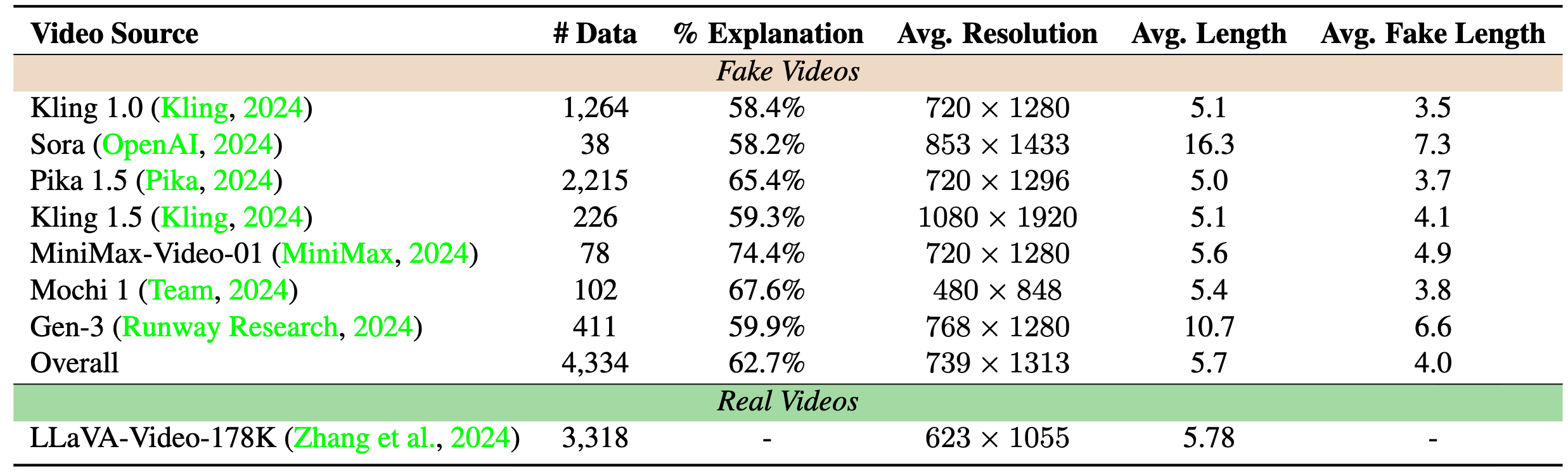

The dataset consists of 4,334 fake videos generated by six state-of-the-art text-to-video (T2V) models and 3,318 real videos sourced from LLaVA-Video-178K (Zhang et al., 2024) for training. For both sources, we report the number of videos, the proportion with human-written explanations, the average resolution (mean height and width), the average video length (seconds), and the average length of annotated fake clues (based on start/end timestamps). The dataset features substantial diversity in both resolution and temporal duration across models.
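The per-source statistics listed above are simple averages. The sketch below shows one way to compute them from per-video metadata; the dictionary keys (`explanation`, `height`, `width`, `length_s`, `clues`) are hypothetical, and the clue length is taken as end minus start timestamp, as the description states. For a source with no annotated clues (such as real videos), it returns `None` rather than a number.

```python
from statistics import mean


def summarize(videos):
    """Per-source statistics for a list of video metadata dicts (hypothetical keys)."""
    # Each clue is a (start, end) pair in seconds; its length is end - start.
    clue_lengths = [end - start for v in videos for (start, end) in v["clues"]]
    return {
        "num_videos": len(videos),
        "explained_ratio": sum(1 for v in videos if v["explanation"]) / len(videos),
        "mean_height": mean(v["height"] for v in videos),
        "mean_width": mean(v["width"] for v in videos),
        "mean_length_s": mean(v["length_s"] for v in videos),
        "mean_clue_length_s": mean(clue_lengths) if clue_lengths else None,
    }


# Usage with two made-up fake videos:
fake = [
    {"explanation": "Fingers fuse together.", "height": 720, "width": 1280,
     "length_s": 5.0, "clues": [(1.0, 2.5)]},
    {"explanation": "", "height": 480, "width": 854,
     "length_s": 8.0, "clues": [(2.0, 3.0), (4.0, 6.0)]},
]
print(summarize(fake))
```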
